Anthropic Builds Claude Gov for U.S. National Security ⚠️

Claude Gov brings secure AI to defense, ElevenLabs v3 raises the bar for voice realism, and Figma's Dev Mode MCP Server bridges design and code. AI is reshaping security, storytelling, and development work.

The AI landscape moved forward this week across three very different but high-impact domains. Anthropic unveiled Claude Gov, a specialized family of AI models built for U.S. national security agencies, offering secure, high-performance capabilities in classified environments for missions such as threat detection and intelligence analysis. ElevenLabs released v3 (alpha), its most expressive text-to-speech model to date, which produces emotionally rich speech in many languages with fine-grained control over tone and pacing, a good fit for gaming, storytelling, and immersive user experiences. On the design-to-code front, Figma introduced the beta of its Dev Mode MCP Server, which lets AI coding tools such as Copilot and Claude Code access live design context and structured metadata, streamlining development and keeping implementations faithful to the design. Together, these launches show how far AI now reaches, from safeguarding nations to enhancing narratives and reshaping developer workflows.

Claude Gov: Secure AI for National Security Missions

Anthropic has introduced Claude Gov, a specialized set of AI models built exclusively for U.S. national security customers operating in classified environments. Developed with direct input from government partners, the models are designed for sensitive tasks such as intelligence analysis, threat assessment, and strategic planning. They offer improved handling of classified material (including fewer refusals when working with classified information), a deeper understanding of defense and intelligence documents, greater proficiency in languages and dialects critical to national security, and better interpretation of complex cybersecurity data. Claude Gov models went through the same rigorous safety testing as other Claude models, and they are already deployed by agencies at the highest levels of U.S. national security.

ElevenLabs v3: The Most Expressive AI Voice Model Yet

ElevenLabs v3 (alpha) is the company's latest and most expressive text-to-speech model, designed to generate natural, emotionally rich, and dynamic speech. It supports more than 70 languages and adds inline audio tags that let users control emotion, pacing, delivery, and even sound effects directly in the input text. The model can produce lifelike narration, multi-speaker dialogue, and natural back-and-forth interruptions, making it suitable for games, narration, customer support, and content creation. ElevenLabs positions it as its most realistic model yet in tone and delivery, and it is available to developers via API.

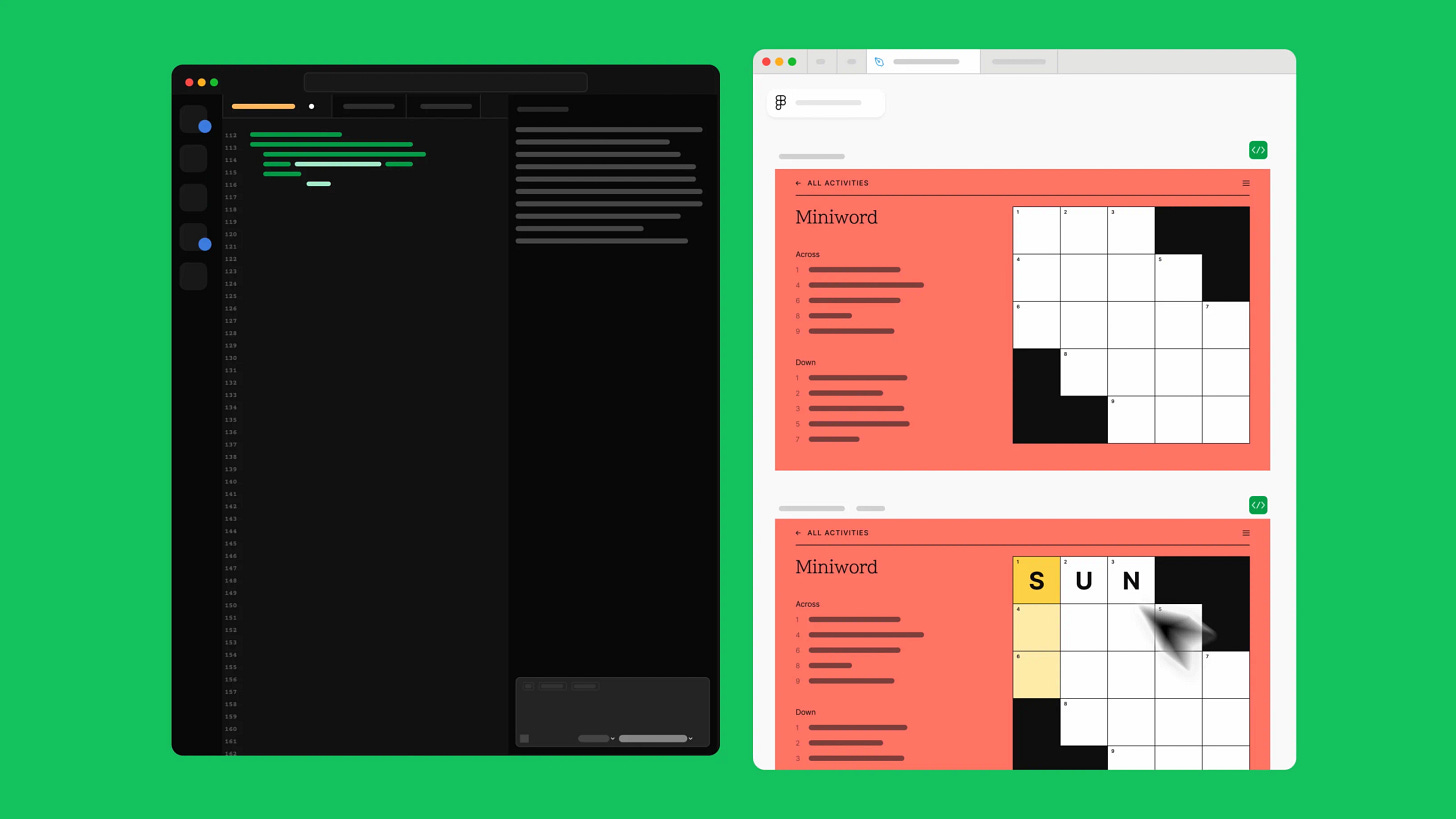

Figma Dev Mode MCP Server: Smarter Design-to-Code Workflows

Figma has launched the beta of its Dev Mode MCP Server, which brings live design context directly into developer workflows through the Model Context Protocol (MCP). The server lets LLM-powered coding tools such as Copilot, Cursor, Windsurf, and Claude Code generate more accurate, design-informed code by accessing structured design metadata, component references, variables, images, and even interactive code snippets. Instead of relying on screenshots or detached APIs, developers can align code more closely with design intent, which speeds up work and reduces misinterpretation. The server supports customizable responses and integration with existing codebases through Code Connect, and Figma says deeper features are on the way, including remote server access and expanded interactivity.
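Under the hood, MCP messages are JSON-RPC 2.0, and a client invokes a server-side tool with a `tools/call` request. The sketch below, assuming a generic MCP client written in Python, shows how a coding tool might ask a design server for context and read the text items out of the result. The tool name `get_design_context` and the `nodeId` argument are hypothetical placeholders, not taken from Figma's documentation, and the response is a made-up sample; the transport (stdio or HTTP) and the initialization handshake are omitted.

```python
import json
from typing import Any


def build_tool_call(request_id: int, tool_name: str, arguments: dict[str, Any]) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


def extract_text(response_json: str) -> list[str]:
    """Collect the text items from a tools/call result's `content` list."""
    response = json.loads(response_json)
    if "error" in response:
        # JSON-RPC errors carry a human-readable message.
        raise RuntimeError(response["error"].get("message", "unknown MCP error"))
    return [
        item["text"]
        for item in response["result"].get("content", [])
        if item.get("type") == "text"
    ]


# Hypothetical tool name and argument: NOT taken from Figma's documentation.
request = build_tool_call(1, "get_design_context", {"nodeId": "12:34"})
print(request)

# A made-up response shaped like an MCP tool result, for illustration only.
fake_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "Button: primary, 8px radius"}]},
})
print(extract_text(fake_response))
```

In a real client, the serialized request would be sent over the server's transport after the MCP initialization handshake, and tool names would come from the server's `tools/list` response rather than being hard-coded.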

Hand-Picked Video

In this video, I’ll show you how my AI-powered agent automatically finds and analyzes competitors using Firecrawl, Perplexity Sonar, and OpenAI APIs.

Top AI Products from this week

FuseBase (formerly Nimbus) - Scattered tools and clunky collaboration cost you time and money. FuseBase unifies internal and external teamwork, with AI agents that automate admin work so you can focus on what matters most.

KLING AI - KLING AI, a creative studio from Kuaishou Technology, specializes in image and video generation. It turns text prompts and images into realistic visuals, with strong prompt comprehension, fine detail, and a wide range of styles.

Skywork Super Agents - Skywork turns simple prompts into comprehensive, research-backed content in minutes. Its AI agents, which the company says explore 10× more sources than competitors, produce polished documents, presentations, spreadsheets, podcasts, and webpages at a lower cost.

EmotionSense Pro - EmotionSense Pro is a Chrome extension that uses AI to detect emotions in real time during Google Meet calls, without sending data to external servers. It is aimed at UX researchers, recruiters, and educators looking for ethical, local emotion analytics.

VibeKit - VibeKit is an open-source SDK for running coding agents such as OpenAI Codex and Claude in secure sandboxes. Agents can write code, install packages, or open PRs safely, with streaming, async tasks, and telemetry built in. It is MIT-licensed, written in TypeScript, and free of vendor lock-in.

MiniCPM 4.0 - MiniCPM 4.0 is a family of ultra-efficient, open-source models for on-device AI. It delivers significant speedups on edge chips and strong performance, and includes highly quantized BitCPM variants.

This week in AI

Reimagine Video Like Never Before - Modify Video lets you transform scenes, styles, and characters while preserving the original motion and performance. It is integrated into Luma AI's Dream Machine.

Cross the Uncanny Valley - Bland TTS turns text into lifelike speech and can clone a voice from a single MP3 file. You can control emotion or build voice apps with its API, and a free trial is available at bland.ai.

Amazon's $10B AI Bet in NC - Amazon plans to invest $10B in North Carolina data centers, expanding its AI infrastructure and creating 500 jobs as part of its $100B AI-focused capital spending plan.

SmolVLA: Open VLA for Robotics - SmolVLA is a compact, open-source Vision-Language-Action model for robotics that runs on consumer hardware and, according to its authors, outperforms larger models on real-world tasks.

MIT & NVIDIA Speed Up Robot Planning - A new GPU-accelerated algorithm, cuTAMP, lets robots solve complex manipulation tasks in seconds by evaluating thousands of candidate motion plans in parallel.